At the end of last week, a few of us from QuickLearn Training hosted a webinar with an overview of a few of the new features in BizTalk Server 2016. This post serves as a proper write-up of the feature that I shared and demonstrated – Shared Access Signature Support for Relay Adapters. If you missed it, we’ve made the full recording available on YouTube here. We’ve also clipped out just the section on Shared Access Signature Support for Relay Adapters over here – which might be good to watch before reading through this post.

While that feature is not the most flashy or even the most prominent on the What’s New in BizTalk Server 2016 page within the MSDN documentation, it should come as a nice relief for developers who want to host a service in BizTalk Server while exposing it to consumers in the cloud — with the least amount of overhead possible.

Shared Access Signature (SAS) Support for Relay Adapters

You can now use SAS authentication with the following adapters:

- WCF-BasicHttpRelay

- WCF-NetTcpRelay

- WCF-BasicHttp*

- WCF-WebHttp*

* = SAS for these adapters is used only when sending messages as a client (the adapters can still be used as receive adapters, just not to host Azure Relay enabled endpoints)

Why Use SAS Instead of ACS?

Before BizTalk Server 2016, our only security option for the BasicHttpRelay and NetTcpRelay adapters was the Microsoft Azure Access Control Service (ACS).

One of the main scenarios that the Access Control Service was designed for was Federated Identity. For simpler scenarios, wherein I don’t need claims mapping, or even the concept of a user, using ACS adds potentially unnecessary overhead to (1) the deployed resources (inasmuch as you must setup an ACS namespace alongside the resources you’re securing), and (2) the runtime communications.

Shared Access Signatures were designed more for fine-grained and time-limited authority delegation over resources. The holder of a key could sign and distribute small string-based tokens that define a resource a client could access and timeframe within which they were allowed to access the resource.

Hosting a Relay Secured by Shared Access Signatures

In order to expose a BizTalk hosted service in the cloud via Azure Relay, you must first create a namespace for the relay – a place for the cloud endpoint to be hosted. It’s at the namespace level that you can generate keys used for signing SAS tokens that allow BizTalk server to host a new relay, and tokens that allow clients to send messages to any of that namespace’s relays.

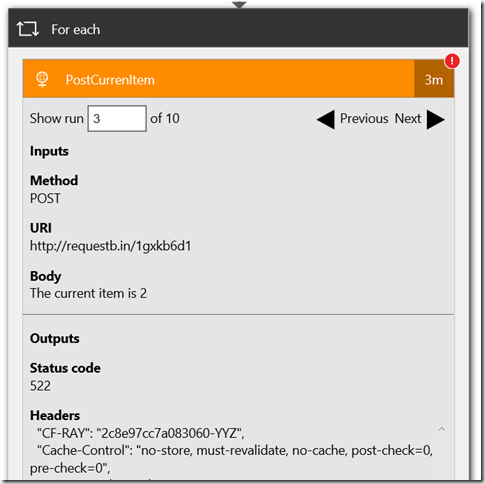

The generated keys are associated with policies that have certain associated claims / rights that each is allowed to delegate.

In the example above, using the key associated with the biztalkhost policy, I would be able to sign tokens that allow applications to listen at a relay endpoint within the namespace, but I would not be able to sign tokens allowing applications to Send messages to the same relays.

Clicking a policy reveals its keys. Each policy has 2 keys that can be independently refreshed, allowing you to roll over to new keys while giving a grace period in which the older keys are still valid.

Either one of these keys can be provided in the BizTalk Server WCF-BasicHttpRelay adapter configuration to host a new relay.

Configuring the Security Settings for the WCF-BasicHttpRelay Adapter

When configuring the WCF-BasicHttpRelay adapter, rather than providing a pre-signed token with a pre-determined expiration date, you provide the key directly. The adapter can then sign its own tokens that will be used to authorize access to the Relay namespace and listen for incoming connections. This is configured on the Security tab of the adapter properties.

If you would like to require clients to authenticate with the relay before they’re allowed to send messages, you can set the Relay client authentication type to RelayAccessToken:

From there it’s a matter of choosing your service endpoint, and then you’re on your way to a functioning Relay:

Once you Enable the Receive Location, you should be able to see a new WCF Relay with the same name appear in the Azure Portal for your Relay namespace. If not, check your configuration and try again.

Most importantly, your clients can update their endpoint addresses to call your new service in the cloud.

The Larger Picture: BizTalk Hybrid Cloud APIs

One thing to note about this setup, however, is that the WCF-BasicHttpRelay adapter is actually not running in the Isolated Host. In other words, rather than running as part of a site in IIS, it’s running in-process within the BizTalk Server Host Instance itself. While that provides far less complexity, it also sacrifices the ability to run the request through additional processing before it hits BizTalk Server (e.g., rate limiting, blacklisting, caching, URL rewriting, etc…). If I were hosting the service on-premises I would have this ability right out of the box. So what would I do in the cloud?

Using API Management with BizTalk Server

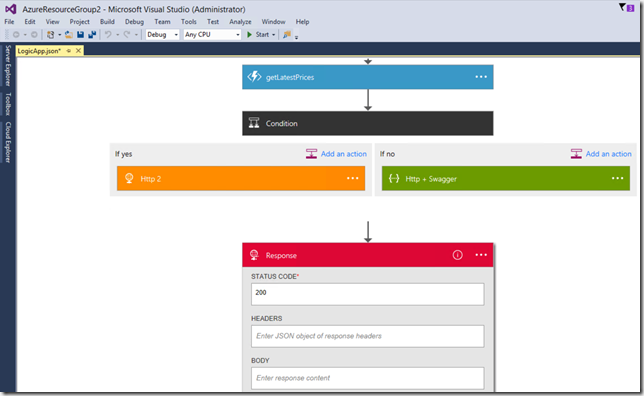

In the cloud, we have the ability to layer on other Azure services beyond just using the Azure Relay capability. One such service that might solve our dilemma described in the previous section would be Azure API Management.

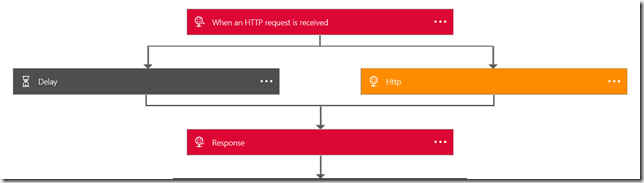

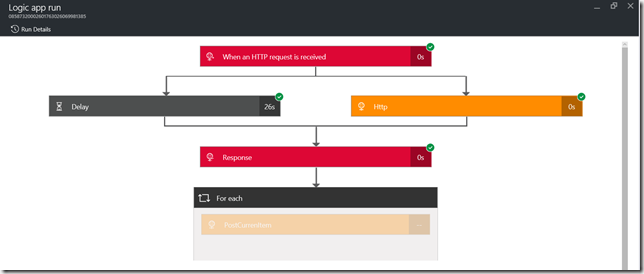

Rather than having our clients call the relay directly (and thus having all message processing done by BizTalk Server), we can provide API Management itself a token to access to our BizTalk Hosted service. The end users of the service wouldn’t know the relay address directly, or have the required credentials to access it. Instead they would direct all of their calls to an endpoint in API Management.

API Management, like IIS, and like BizTalk Server, provide robust and customizable request and response pipelines. In the case of API Management, the definitions of what happens in these pipelines are called “policies.” There are both inbound policies and outbound policies. These policies can be configured for a whole service at a time, and/or only for specific operations. They enable patterns like translation, transformation, caching, and rewriting.

In my case, I’ve designed a quick and dirty policy that replaces the headers of an inbound message so that it goes from being a simple GET request to being a POST request with a SOAP message body. It enables caching, and at a base level implements rate-limiting for inbound requests. On the outbound side it translates the SOAP response to a JSON payload — effectively exposing our on-premises BizTalk Server hosted SOAP service as a cloud-accessible RESTful API.

So what does it look like in action? Below, you can see the submission of a request from the client’s perspective:

How does BizTalk Server see the input message? It sees something like this (note that the adapter has stripped away the SOAP envelope at this point in processing):

What about on the outbound side? What did BizTalk Server send back through the relay? It sent an XML message resembling the following:

If you’re really keen to dig into the technical details of the policy configuration that made this possible, they’re all here in their terrifying glory (click to open in a new window, and read slowly from top to bottom):

The token was generated with a quick and dirty purpose-built simple console app (the best kind).

Tips, Tricks, and Stumbling Blocks

Within the API Management policy shown above, you may have noticed the CDATA sections. This is mandatory where used. You’ll end up with some sad results if you don’t remember to escape any XML input you have, or the security token itself which includes unescaped XML entities.

Another interesting thing with the policy above is that the WCF-BasicHttpRelay adapter might choke while creating a BizTalk message out of the SOAP message constructed via the policy above (which includes heaps of whitespace so as to be human readable), failing with the following message The adapter WCF-BasicHttpRelay raised an error message. Details “System.InvalidOperationException: Text cannot be written outside the root element.

This can be fixed quite easily by adjusting the adapter properties so that they’re looking for the message body with the expression set to “*”.

Questions and Final Thoughts

During the webinar the following questions came up:

- One audience member asked, “Is https supported?”

- A: Yes, for both the relay itself and the API management endpoint.

- Another audience member inquired, “Maximum size is 256KB; I was able to get a response about 800 KB; Is that because BizTalk and Azure apply the compression technology and after compression the 800KB response shrinks to about 56KB?”

- A: The size limit mentioned applies to brokered messages within Service Bus (i.e., those you would receive using the SB-Messaging adapter). Azure Relay is a separate service that is not storing the message for any period of time – messages are streamed to the service host. Which means if BizTalk Server disconnects, the communication is terminated, but on the plus side you’re not having to worry about how much space you’re allowed to use per message in the cloud. There’s a nice article comparing the two communication styles over here.

I hope this has been both helpful and informative. Be sure to keep watching for more of QuickLearn Training’s coverage of New Features in BizTalk Server 2016, and our upcoming BizTalk Server 2016 training courses.