The first day of the London BizTalk Summit 2015 was a fast-paced, jam-packed, highly informative, and outstanding¹ way to kick off an event. I sat through all the sessions, and ended up with 25 pages of notes and nearly 200 photos. I have no idea how I’m going to pull this post together in a timely fashion, so I’m just going to dive right in and do what I can.

Service Bus Backed Code-based Orchestration using Durable Task Framework

The morning kicked off with Dan Rosanova delivering his session a little bit early, and in place of Josh Twist’s planned keynote (Josh had taken ill and was unable to make it to the conference). Dan presented the Durable Task Framework – an orchestration engine built on top of Azure Service Bus for durability and the Task Parallel Library for .NET developer happiness.

While not necessarily one of the big new things in the world of BizTalk (i.e., it has been around and doing great since 2013), it is one of those things that allows you to implement some of the same patterns as BizTalk Server, whilst solving some of the same problems that Orchestrations tackle, but with tracing and persistence provided by the cloud, and a design-time experience that feels comfortable to those averse to using mice – pure, real, unadulterated C# code.

The framework provides the following out-of-the-box capabilities:

- Error handling & compensation

- Versioning

- Automatic retries

- Durable timers

- External events

- Diagnostics

That list does the framework a great disservice, and it completely understates the value. Please understand that this is really cool stuff, and one of those things that you just have to see. I’m really happy to have seen the framework featured here; it is definitely now on my list of fun things to experiment with.
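To give a rough feel for what "automatic retries" means in a code-based orchestration, here is a minimal sketch in Python. It is not the Durable Task Framework's API (that is C#, and its retries are durable across process restarts, which this is not); the function name `run_with_retries` and all of its parameters are my own assumptions for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    max_attempts: int = 3,
    first_delay: float = 1.0,
    backoff: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call task() until it succeeds or max_attempts is reached.

    Hypothetical helper: waits first_delay seconds after the first failure
    and multiplies the wait by backoff after each further failure.
    """
    delay = first_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            sleep(delay)
            delay *= backoff
    raise ValueError("max_attempts must be at least 1")
```

The `sleep` parameter is only there so the waiting can be swapped out (for a test, or for something smarter than blocking the thread). The appeal of the real framework is that this kind of policy is declared once and then survives restarts, rather than being hand-rolled around every call.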

I really love Azure Service Bus – it makes me very happy. It’s also making a good showing of Microsoft’s cloud muscle – recently crossing the 500 billion messages per month barrier. That’s nuts!

On a side note, I must say that Dan is an excellent presenter, and I would recommend checking out any talk of his that you have the opportunity to hear. POW!

API Apps for IBM Connectivity

Following Dan’s talk, Paul Larsen took the stage to talk about API Apps for IBM Connectivity. Some time was spent recapping the Azure App Service announcement from a few weeks back for those in attendance who were unfamiliar, but then he dove right into it.

The first connector he discussed was the MQ Connector – an API App for connecting applications through Azure App Service to IBM WebSphere MQ server. It can reach all the way on-prem using VPN or Hybrid Connection. The connector uses IBM’s native protocols and formats, while being implemented as a completely custom, fully managed, Microsoft-built client – something that was needed to most efficiently build out the capability given the host. That’s really impressive, especially given the compressed timeline, and complete paradigm shift thrown into the mix. There are some limitations though – only version 8 is supported, and there is no backwards compatibility yet.

He also took some time to demo the DB2 connector and the Informix connector that are currently in preview, and to present a roadmap that included a TI Connector, TI Service (Host Initiated), DRDA Service (Host Initiated), and of course Host Integration Server v10.

Karandeep Anand Rescues the Keynote

Karandeep Anand, Partner Director of Program Management at Microsoft, flew in from Seattle at the last moment to ensure that the keynote session could still be delivered. He came fresh from the airplane, and started immediately into his talk.

One of the first things he said as he was working through the slides introducing the Azure App Service platform was, “We’re coming to a world where it doesn’t take us 3 years to come out with the next version but 3 weeks to come out with the next feature.” This is so true, and really only fully possible to do with a cloud-based application.

If that statement has you concerned, keep calm. This is a model that Microsoft has already proven on a massive scale with Team Foundation Server. Since the 2012 version of the server product, there has been a mirror of the capabilities in the cloud (first Team Foundation Service, and now Visual Studio Online) providing new features, enhanced functionality, and bug fixes on a 3-week cadence, followed up by a quarterly release cycle for on-prem.

It’s a model that can definitely work, and I’m excited to see how it might play out on the BizTalk side of things (especially in a microservices architecture where each API App that Microsoft builds is individually versioned and deployed on potentially a per-application basis, not just per-tenant).

It was a model of necessity though when the challenge was issued: “In less than a quarter, we will rebuild our entire integration stack to be aligned with the rest of the app platform.”

Overall, Karandeep did a good job of demonstrating that the Azure App Service offering isn’t just following a new fad, or achieving buzzword compliance, but is instead about learning from past mistakes and applying those lessons.

In discussing the key learnings, there were some interesting things that came out of building BizTalk Services:

- Validated the brand – there is power in the BizTalk brand name, it is synonymous with Integration on the Microsoft platform

- Validated cloud design patterns – MSDTC works on-prem, but doesn’t make a lot of sense in the cloud

- Hybrid is critical and a differentiator

- Feature and capability gaps (esp. around OOTB sources/destinations)

- Pipeline templates, custom code support

- Long running workflows, parallel execution

- Needs a lot more investment

As a result of all of the lessons learned (not just with BizTalk Services), the three guiding principles of building out Azure App Service became: (1) Democratize Integration, (2) Rich Ecosystems, (3) iPaaS Leader.

Shortly after working through the vision, Stephen Siciliano, Sr. Program Manager at Microsoft, and Prashant Kumar came up to assist in demonstrating some of the capabilities provided by the Azure App Service platform.

Stephen’s demo focused on flexing the power of the Logic App engine to compose API Apps and connect to social and SaaS providers (extracting tweets on a schedule and writing them to Dropbox, then looping over the array of tweets rather than only grabbing the first one), while Prashant’s demo showed the use of BizTalk API Apps to handle EAI scenarios in the cloud (calling rules to apply a discount to an XML-based order received at an HTTP location, and then routing the order to a SQL Server database).

App Service Intensives Featuring Stephen Siciliano, Prashant Kumar, and Sameer Chabungbam

Right after the keynote, there were three back-to-back sessions (with lunch packed somewhere in between) that provided a raw information download of things that have been out there for discovery, but not yet mentioned directly. I was really pleased with being able to see it live, because it’s going to be a while before all of the information shown can be documented and disseminated fully by the community.

We’ve been hard at work at QuickLearn on our new Azure App Service class – with the first run just 3 weeks away! For a lot of the conceptual material we’ve relied heavily on the language specification for Logic Apps that was posted not too long ago – trying to understand the capabilities of the engine, rather than the limitations of the designer. So one of the big takeaways for me from Stephen’s session was seeing a lot of those assumptions that we’ve had validated, and then seeing other things pointed out that weren’t as clear at first glance.

Essentially he discussed the expression language (see the language spec for full coverage), the mechanics of triggering a Logic App (see here for full coverage), and the one that stuck out to me: the three ways to introduce dependencies between actions.

Actions aren’t what they seem in the designer (if you follow me on Twitter, you would have seen the moment I first learned that thanks to Daniel Probert’s post). They can have complex relationships and dependencies, which can be defined either explicitly or implicitly. The way that Stephen laid it out, I think, was done really well, and certainly sparked my imagination. So here it is, the three ways you can define dependencies for actions:

- Implicitly – whenever you reference the output of an action you’ll depend on that action executing first

- Explicit “dependsOn” condition – you can mark certain actions to run only after previous ones have completed

- Explicit “expression” condition – a complex function that evaluates properties of other actions (ex: only execute if there was a failure detected / CBR scenarios)

For those that are lost at this point (confused as to why I’m so excited here): If you don’t have a dependency between two steps, they will run in parallel. You can have a fork and rejoin if the rejoined point has a dependency on the last step in both branches. Picture Nino Credele-level happiness, and that’s what you should be feeling right now upon hearing that.
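To make the fork-and-rejoin idea concrete, here is a small sketch in Python of how an engine could work out which actions are free to run side by side. This is not the Logic Apps definition language or its engine; the `execution_waves` function and the action names in `workflow` are hypothetical, and only the explicit "depends on" style of dependency is modeled.

```python
from typing import Dict, List, Set


def execution_waves(depends_on: Dict[str, List[str]]) -> List[List[str]]:
    """Group actions into waves; every action within a wave can run in parallel.

    depends_on maps each action name to the actions it must wait for.
    """
    remaining = {name: set(deps) for name, deps in depends_on.items()}
    done: Set[str] = set()
    waves: List[List[str]] = []
    while remaining:
        # An action is ready once everything it depends on has completed.
        ready = sorted(name for name, deps in remaining.items() if deps <= done)
        if not ready:
            stuck = ", ".join(sorted(remaining))
            raise ValueError("circular or unknown dependency among: " + stuck)
        waves.append(ready)
        done.update(ready)
        for name in ready:
            del remaining[name]
    return waves


# Hypothetical workflow: two checks fork after the order arrives, then rejoin.
workflow = {
    "receive_order": [],
    "check_stock": ["receive_order"],
    "check_credit": ["receive_order"],
    "send_confirmation": ["check_stock", "check_credit"],
}

for wave in execution_waves(workflow):
    print(wave)
```

The two checks share a wave because neither depends on the other, and the confirmation step waits for both because it depends on the last step of each branch. That is the whole trick: no dependency means parallel, and a shared dependent means rejoin.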

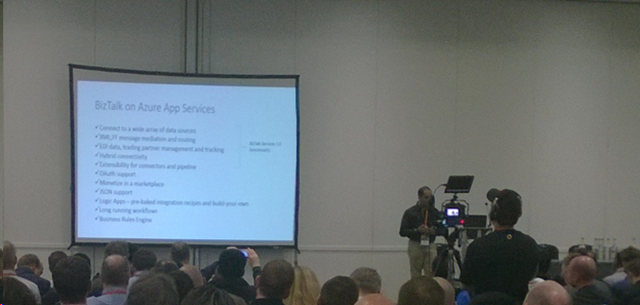

After Stephen left the stage, Prashant Kumar started into his presentation covering BizTalk on App Service. He set the stage by recapping the initial customer feedback on MABS (e.g., more sources/destinations needed out of the box, etc…), and then started to show how Azure App Service capabilities map to BizTalk Server capabilities and patterns that we all know and love:

API Apps

- Connectors: SaaS, Enterprise, Hybrid

- Pipeline Components: VETR

- Extensibility: Connectors and Pipeline

Logic Apps

- Orchestration

- Mediation Pipeline

- Tracking

The interesting one is the dual role of Logic Apps as both Orchestration and Mediation Pipeline (with API App components). So will we build pipeline (VETR focused) Logic Apps ending in a Service Bus connector that could potentially be subscribed to by orchestration (O focused) Logic Apps? Maybe? Either way, that’s a solid way to start the presentation.

After a trip through the slides, Prashant took us to the Azure Portal where all the magic happens. There he demoed a pretty nice scenario wherein we got to see all of the core BizTalk API Apps in action (complete with rules engine invocation, and EDIFACT normalization to a canonical XML model).

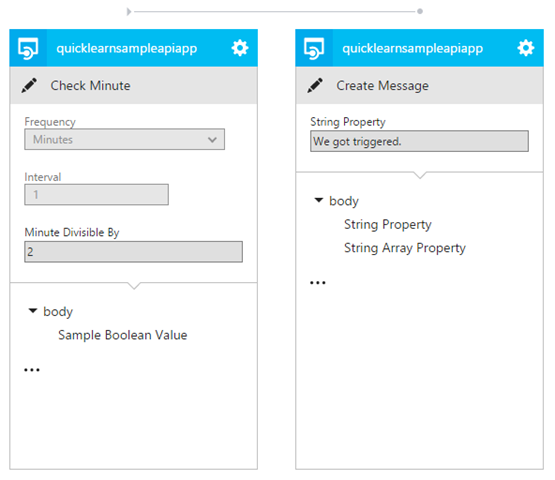

Sameer Chabungbam’s session was the last in the group of sessions diving deep into the world of Azure App Service. His session focused around building a custom connector/trigger (in this case for the Azure Storage Queue). He walked through the API App project template specifics (i.e., its inclusion of required references, and additional files that make it work, plus a quick walk through the helper class for Swagger metadata generation). Most of the specifics that he dealt with centered around custom metadata generation.

I’m going to take a step back for a moment because I sense there has been some confusion based on conversations that I’ve had today. The confusion centers around Swashbuckle and Swagger – both technologies used by API Apps. Neither of these technologies was built by Microsoft; both are community efforts attempting to solve two different problems. Swagger is trying to solve the problem of providing a uniform metadata format for describing RESTful resources. Swashbuckle is trying to solve the problem of automatically generating Swagger metadata for Web API applications. Swashbuckle can optionally provide a UI for navigating through documentation related to the API exposed by the metadata with help from swagger-ui.

With that background in place, essentially Sameer showed how we can make the Logic App designer-UI happy with consuming the metadata in such a way that it displays a connector and trigger in a user-friendly fashion (through custom display names, UI-suppressed parameters, etc…), while also taking advantage of the state information provided by the runtime within the custom trigger.

Generation/modification of the generated metadata can be accomplished through a few different mechanisms (that operate at different levels within the metadata) within Swashbuckle. The methods demonstrated made heavy use of OperationFilters (which control metadata generation at the operation level).
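As a rough illustration of what "controlling metadata generation at the operation level" looks like, here is a sketch in Python that walks a Swagger document and lets small filter functions adjust each operation. It is not Swashbuckle's API (that is C#) and not the code from the session; the function names and the `x-example-display-name` vendor extension are hypothetical stand-ins chosen for this example.

```python
from typing import Any, Callable, Dict, Iterable

Operation = Dict[str, Any]
# A filter receives the path, the HTTP method, and the operation to adjust.
OperationFilter = Callable[[str, str, Operation], None]

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def apply_operation_filters(
    swagger: Dict[str, Any], filters: Iterable[OperationFilter]
) -> Dict[str, Any]:
    """Run every filter over every operation, modifying the document in place."""
    filters = list(filters)
    for path, path_item in swagger.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as shared "parameters"
            for operation_filter in filters:
                operation_filter(path, method, operation)
    return swagger


def friendly_name_filter(names: Dict[str, str]) -> OperationFilter:
    """Build a filter that attaches a display name keyed by operationId."""

    def apply(path: str, method: str, operation: Operation) -> None:
        name = names.get(operation.get("operationId", ""))
        if name:
            # Hypothetical vendor extension; Swagger allows any "x-" prefixed key.
            operation["x-example-display-name"] = name

    return apply
```

A designer that understands the extra key could then show the friendly name instead of the raw operation identifier, which is the general shape of what the session demonstrated.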

Unfortunately, my seat during this session did not provide a clear view of the screen, so I am unable to share all of the specifics at this time in a really easy fashion, but that is something that I will be writing up in short order in a separate posting.

UPDATE: The code from his talk has now been posted as a sample.

Yes, You Did Hear Jackhammering at Kovai

To kick off the BizTalk 360 presentation, Nino Credele provided comic relief demonstrating BizTalk Nos Ultimate – now the second offering in the BizTalk 360 family. I’ve got to just throw out a big congratulations to Nino especially and to the team at Kovai for pulling together the tool into a full commercial product. It has been really cool to watch it progress over time, and it must have been a special moment to be able to print out the product banners and make it real. Good work! A lot of developers are going to be very happy.

I also want to take time out to thank the BizTalk 360 team for continuing to organize these events – even though they’re not an event management company by trade – it does take a lot of work (and apparently involves walking 10km around the convention center each day).

No Zombies for Michael Stephenson and Oliver Davy

Not content to show off a web-scale, robust, enterprise-grade file copy demo, Michael Stephenson made integration look like magic by recasting the problem of application integration through the lens of Minecraft. While maybe an excuse for just playing around with fun technology, and getting in some solid cool dad time along the way, Michael showed what it might look like when one is forced to challenge established paradigms to extend data to applications and experiences that weren’t considered or imagined in advance.

This effort started during his work with Northumbria University, a university focused on turning integration into an enabler and not a blocker, to envision what the distant future of integration might look like. That future is one where the existence of an integration platform is assumed, and this becomes the fertile soil into which the seeds of curiosity can be sown. This future was positioned as a solution to those systems that were “developed by the business, but [haven’t] really ever been designed by the business. [They have] just grown.” (Oliver Davy – Architecture & Analysis Manager @ Northumbria University).

The approach to designing the integration platform of the future was to layer abstract logical capabilities / services within a core integration platform (e.g., Application Connector Service, Business Service, Integration Infrastructure Service, Service Gateway, API Management), and then, and only then, layer on concrete technologies with considerations for the hosting container of each. Capabilities outside of the core are grouped as extensions to the core platform (e.g., SOA, API & Services, Hybrid Integration, Industry Verticals, etc…). I felt like there were echoes of Shy Cohen’s Ontology and Taxonomy of Services² paper.

Honestly, there’s some real wisdom in the approach – one that recognizes the additive nature of technologies in the integration space, inasmuch as it is very rare for one architectural trend to fully replace another. It is also an approach that seeks to apply lessons learned instead of throwing them away for shiny objects.

A common theme throughout Michael’s talk was the theme of agile integration. I’m hoping he takes time to expand on this concept further³. In other words, I don’t see “agile integrations” as integrations that fall into the “hacking IT” zone (which limits scope and often sacrifices quality to provide lower cost), but those that are limited in scope to provide lower cost without sacrificing quality.

Stephen Thomas’ Top 14 Integration Challenges

To wrap up the day, Integration MVP Stephen Thomas shared the top 14 integration challenges that he has seen over the past 14 years (and did really well with a rough time slot):

- Finding Skilled Resources

- Having Too Much Production Access

- Following a Naming Standard

- Developing a Build and Deployment Process

- Understanding the Data

- Using the ESB Toolkit (Incorrectly)

- Planning Capacity Properly

- Creating Automated Unit Tests

- Thinking BizTalk Development is like .NET Development

- Having Environments Out of Sync

- Involving Production Support Too Late

- Allowing Operations to Drive Business Requirements

- Over Architecting

- Integrators Become Too Smart!

With a rich 14-year history in the space (and the ability to live up to his email address), Stephen shared some anecdotes for each of the points to address why they were included in the list.

Takeaway for the Day

I don’t have just one take-away for the day. I’ve written thousands of words here, and have 25 pages of raw unfiltered notes. Again, this is one of those times where I’m dumping information now, and will be synthesizing it in my head for another 6 months before having 1 or 2 moments of sheer clarity.

So on that note, here’s my take-away: people in the integration space are very intelligent, passionate, and driven – ultimately insanely impressive. I’m just happy to be here and I’m hoping that iron does indeed sharpen iron as we all come together and share new toys, battle stories, and proven patterns.

1 Not like @wearsy’s questions.

2 I’m sure just seeing the title of that article would give @MartinFowler heartburn.

3 Though he may have already, and I’m simply unaware.
