I’m going to start off today’s post with some clarifications and corrections to my previous posts.

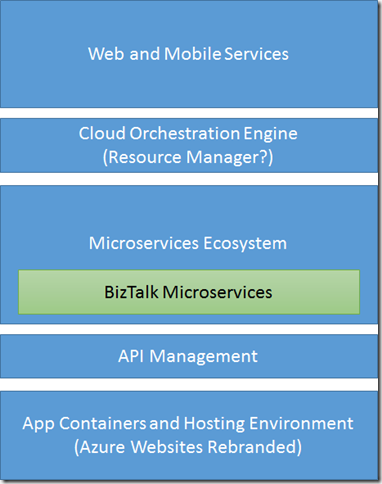

First off, it is now my understanding that the “containers” in which the Microservices will be hosted and executed are simply a re-branding of the Azure Websites functionality that we already have. This has interesting implications for the Hybrid Connections capability as well, inasmuch as our Microservices essentially inherit the ability to interface directly with on-premise systems as if they were local.

This also brings clarity to the “any language” remark from the first day. In reality, we’re looking at building them in any language supported by Azure Websites (.NET languages, Java, PHP, Node.js, Python), or truly any language if we host the implementation externally but expose a facade through Azure Websites (at the cost of egress charges, added latency, and the loss of automatic load balancing and scaling), but I digress.

UPDATE (05-DEC-2014): There are actually some additional clarifications now available here; please read them before continuing. Most importantly, there is no product called the Azure BizTalk Microservices Platform. It’s just a new style in which Microsoft is approaching building out and componentizing integration (and other) capabilities within the Azure Platform. Second, Azure Resource Manager is a product that sits on top of an engine. The engine, not the product itself, is what’s being shared with the new Workflow capability discussed. You could say it’s similar to how workflow services and TFS builds use the same underlying engine (WF).

The rest of the article remains unchanged because there are simply too many places where the name was called out as if it were a product.

Rules Engine as a (Micro)Service

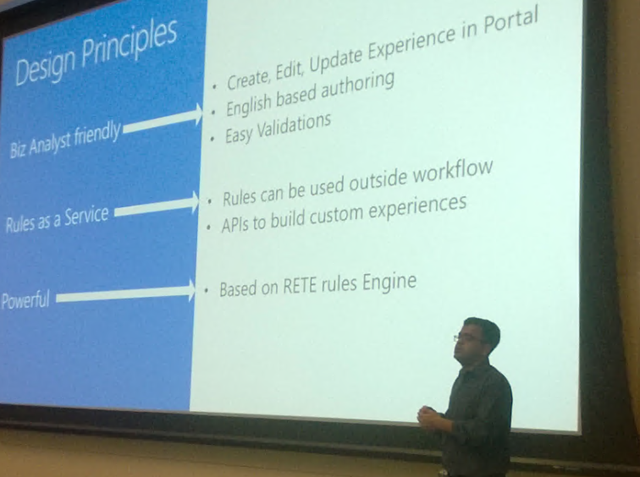

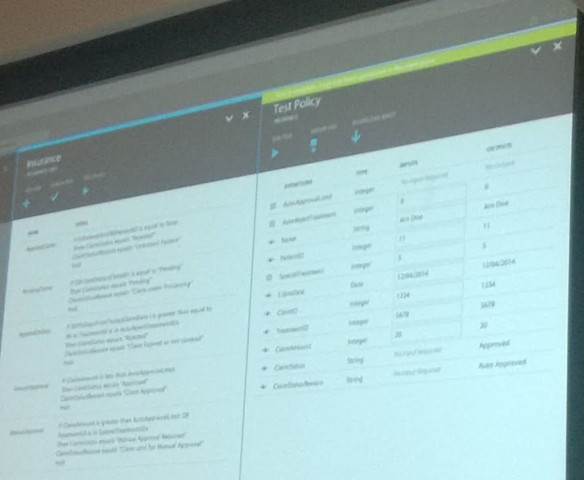

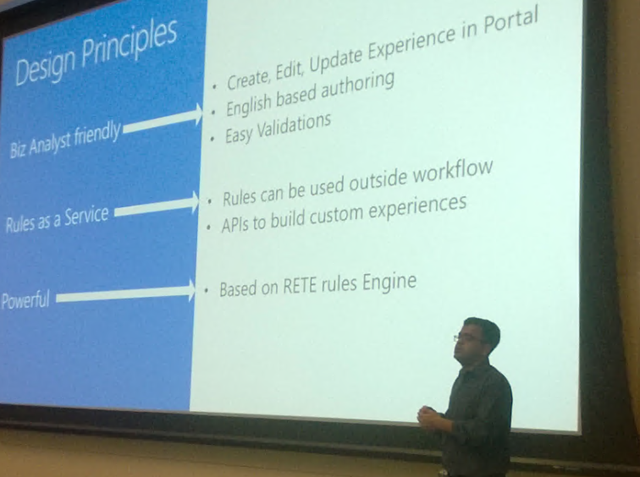

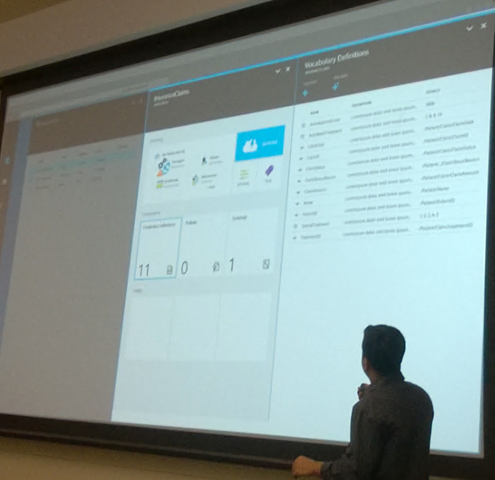

After a long and exciting day yesterday, day 2 of Integrate 2014 got underway with Anurag Dalmia bringing the latest thinking around the re-implementation of the BizTalk Business Rules Engine that is designed to run as a Microservice in the Azure BizTalk Microservices Platform.

First off, this is not the existing BizTalk Rules Engine repackaged for the cloud. This is a complete re-implementation designed for cloud execution and with the existing BRE pain points in mind. From the presentation, it sounds as if the core engine is complete, and all that remains is a new Azure Portal-based design experience (which currently only exists in storyboard form) around designing vocabularies, rules, and policies for the engine.

Currently the (XML-based, not JSON!) vocabularies support:

- Constant & XML based vocabulary definitions

- Single value, range and set of constants

- XML vocabulary definitions (created from uploaded schema)

- Bulk Generation (no details were discussed for this, but I’d be very interested in seeing what that will look like)

- Validation

Missing from the list above are really important things like .NET objects and database tables, but these are slated for future inclusion. That being said, I’m not sure exactly how custom .NET classes as facts are going to work in a Microservices infrastructure, assuming that each Microservice is an independent, isolated chunk of functionality invoked via RESTful interactions. Really, the question becomes: how does the engine get your .dlls so that it can Activator.CreateInstance that jazz? I guess if schema upload can be a thing there, then .dll upload can as well. But then, are these stored in private Azure blob containers, some other kind of repository, or should we even care?

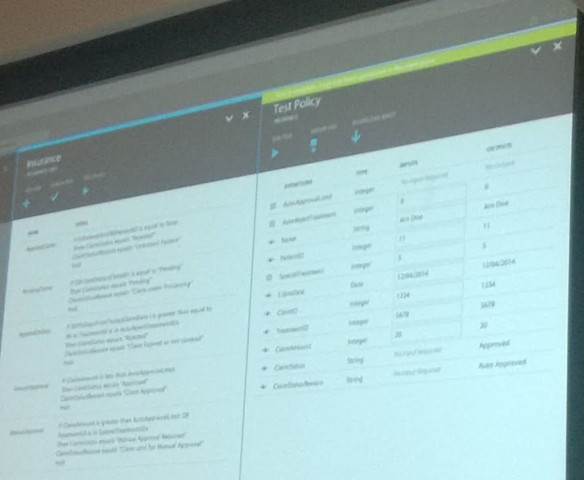

On the actual rules creation side, things become quite a bit more interesting. Gone is the painful million-click Business Rule Composer; free-flowing text takes its place instead. All of this still happens in a web-based editor that also provides IntelliSense-like functionality, tooltips, and color-coding of special keywords. To get a sense for what these rules look like, here’s one rule that was shown:

```
If (Condition)
    ClaimAmount is greater than AutoApprovalLimit OR
    TreatmentID is in SpecialTreatmentIDs
Then (Action)
    ClaimStatus equals "Manual Approval Required"
    ClaimStatesReason equals "Claim sent for Manual Approval"
    Halt
```
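To make the semantics of that rule concrete, here’s a minimal Python sketch that evaluates it against a single claim held in a dictionary. The fact names are copied from the slide, but the dictionary representation and the values of `AUTO_APPROVAL_LIMIT` and `SPECIAL_TREATMENT_IDS` are my own assumptions for illustration; this is not how the new engine actually represents facts or vocabularies.

```python
# Hypothetical vocabulary values: a single constant and a set of constants.
AUTO_APPROVAL_LIMIT = 5000.0
SPECIAL_TREATMENT_IDS = {"T-100", "T-200"}


def manual_approval_rule(facts: dict) -> bool:
    """Evaluate the sample rule against one claim; return True if it fired (and halted)."""
    # If (Condition): "is greater than" maps to >, "is in" maps to set membership.
    if (facts["ClaimAmount"] > AUTO_APPROVAL_LIMIT
            or facts["TreatmentID"] in SPECIAL_TREATMENT_IDS):
        # Then (Action): set the two status fields...
        facts["ClaimStatus"] = "Manual Approval Required"
        facts["ClaimStatesReason"] = "Claim sent for Manual Approval"
        # ...and Halt: the caller should stop evaluating lower-priority rules.
        return True
    return False


claim = {"ClaimAmount": 7500.0, "TreatmentID": "T-001"}
fired = manual_approval_rule(claim)
print(fired, claim["ClaimStatus"])  # True Manual Approval Required
```

Note that either half of the OR is enough: a small claim with a special treatment ID still gets routed to manual approval.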

Features of the Rules Engine were said to include:

- Handling of optional XML nodes

- Enable/Disable Rules

- Rule prioritization through drag-and-drop

- Support for Update / Halt Forward Chaining (No Assert?)

- Test Policy (through Web UI, or via Test APIs)

- Schema Management

I’m not going to lie: at that point, I got really concerned by the lack of any declared ability to Assert new facts (or to Retract facts, for that matter). I’m hoping that this was a simple omission from the slide, but I do intend to reach out for clarification.
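To show why that omission matters, here’s a generic forward-chaining sketch in Python. It is not the new engine’s design (which wasn’t shown); the `Rule`/`Engine` classes, the refraction strategy, and the method names `assert_fact`, `update`, `retract`, and `halt` are my own assumptions, loosely modeled on the classic BizTalk BRE operations. The key difference: `update` only lets rules re-evaluate a fact that is already in working memory, while `assert_fact` lets a rule introduce a brand-new fact that other rules can then match.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Rule:
    name: str
    priority: int                              # higher fires first (drag-and-drop order)
    condition: Callable[[dict], bool]
    action: Callable[[dict, "Engine"], None]
    enabled: bool = True                       # Enable/Disable Rules


class Engine:
    """Toy forward-chaining engine (hypothetical; not the new BizTalk engine)."""

    def __init__(self, rules: List[Rule]):
        self.rules = sorted(rules, key=lambda r: r.priority, reverse=True)
        self.facts: List[dict] = []
        self._fired = set()                    # (rule name, fact id) pairs: refraction
        self._halted = False

    # Working-memory operations that a rule action may call.
    def assert_fact(self, fact: dict) -> None:
        self.facts.append(fact)

    def retract(self, fact: dict) -> None:
        self.facts = [f for f in self.facts if f is not fact]
        self._forget(fact)

    def update(self, fact: dict) -> None:
        # Allow every rule to re-evaluate the modified fact on the next cycle.
        self._forget(fact)

    def halt(self) -> None:
        self._halted = True

    def _forget(self, fact: dict) -> None:
        self._fired = {k for k in self._fired if k[1] != id(fact)}

    def _next_activation(self) -> Optional[Tuple[Rule, dict]]:
        # Highest-priority enabled rule with a matching, not-yet-fired fact wins.
        for rule in self.rules:
            if not rule.enabled:
                continue
            for fact in self.facts:
                if (rule.name, id(fact)) not in self._fired and rule.condition(fact):
                    return rule, fact
        return None

    def run(self, max_cycles: int = 1000) -> None:
        self._halted = False
        for _ in range(max_cycles):
            activation = self._next_activation()
            if activation is None:
                return                         # nothing left to fire
            rule, fact = activation
            self._fired.add((rule.name, id(fact)))
            rule.action(fact, self)
            if self._halted:
                return
        raise RuntimeError("rules did not reach a fixed point")


# Usage: one rule asserts a brand-new Audit fact, which a second rule then matches.
claim = {"type": "Claim", "ClaimAmount": 7500.0}
engine = Engine([
    Rule("FlagLarge", 10,
         lambda f: f.get("type") == "Claim" and f["ClaimAmount"] > 5000,
         lambda f, e: e.assert_fact({"type": "Audit", "reason": "large claim"})),
    Rule("StopOnAudit", 5,
         lambda f: f.get("type") == "Audit",
         lambda f, e: e.halt()),
])
engine.assert_fact(claim)
engine.run()
```

With only Update and Halt available, the `FlagLarge` rule above would have to mutate the claim itself instead of producing a separate fact for downstream rules to react to.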

Building Connectors and Activities

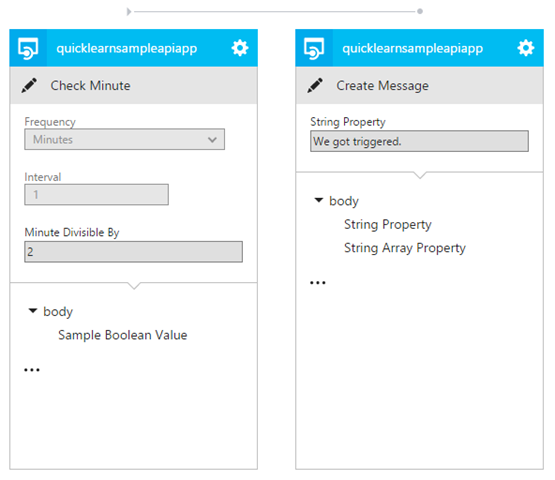

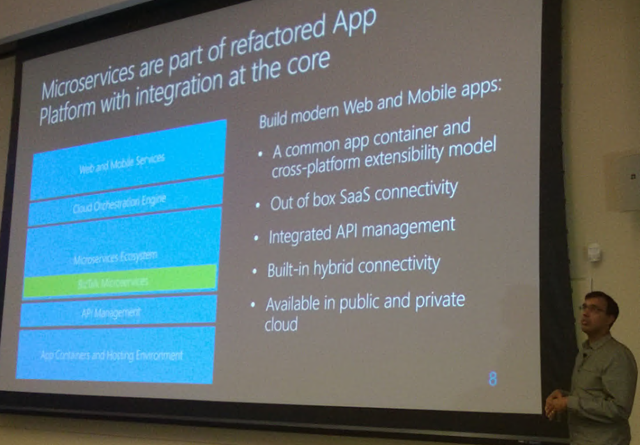

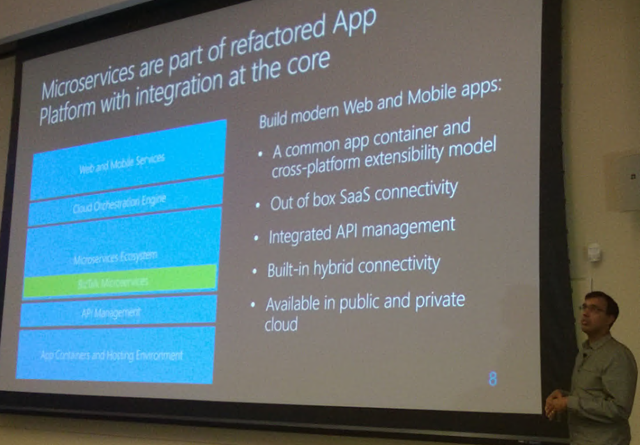

After the session on the Rules Engine, Mohit Srivastava was up to discuss Building Connectors and Activities. The session began, however, with a recap of some of the things that Bill Staples discussed yesterday morning. I’m really thankful for this recap, as I had missed some things along the way (namely Azure Websites as the hosting container), and I also had a chance to snap a picture of what is likely the most important slide of the entire conference (which I had missed the first time around).

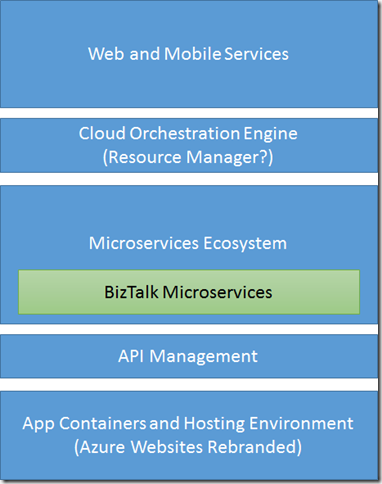

I’ve re-created the diagram of the “refactored” Azure App Platform with a few parenthetical annotations:

One interesting thing about this diagram, when you really think about it, is that the entry point for requests coming into the platform doesn’t have to be at the top. A request can go directly to a capability, to a process, to a composed set of capabilities, or to a full human-friendly UI around any one of those things.

So what are all of the moving pieces that will make it all work?

- Gallery for Microservice Discovery
  - Some Microservices will be codeless (e.g., SaaS and on-premises connectors)
  - Others will be code (e.g., activities and custom logic)

- Hosting – Azure App Container (formerly Azure Websites)

- Gateway
  - Security – identity broker, SSO, secure token store
  - Runtime – name resolution, isolated storage, shared config, “IDispatch” on WADL/Swagger (though such metadata is technically optional)
  - Proxy – monitoring, governance, test pages
  - Brings all of the value of API Management to the gateway out-of-the-box

- Developers
  - Writing RESTful services in your language of choice
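The “IDispatch” bullet refers to the idea that the runtime can late-bind to a service by operation name using its metadata, much as COM’s IDispatch allowed invoking methods by name. As a rough illustration (my own, not the platform’s API), here’s a Python sketch that looks up an `operationId` in a Swagger 2.0 document and resolves it to an HTTP method and URL. The function name `resolve_operation` is hypothetical, and it handles only path and query parameters.

```python
import re
from typing import Tuple
from urllib.parse import urlencode


def resolve_operation(swagger: dict, operation_id: str, **params) -> Tuple[str, str]:
    """Find operation_id in a Swagger 2.0 document and build (METHOD, URL)."""
    scheme = swagger.get("schemes", ["https"])[0]
    base = f"{scheme}://{swagger['host']}{swagger.get('basePath', '/').rstrip('/')}"
    for path, item in swagger["paths"].items():
        for method, op in item.items():
            # Path items may also hold a shared "parameters" list; skip non-operations.
            if not isinstance(op, dict) or op.get("operationId") != operation_id:
                continue
            remaining = dict(params)
            # Substitute {name} templates from params; leftovers become the query string.
            url = base + re.sub(r"\{([^}/]+)\}",
                                lambda m: str(remaining.pop(m.group(1))), path)
            if remaining:
                url += "?" + urlencode(remaining)
            return method.upper(), url
    raise KeyError(f"operation {operation_id!r} not found")
```

Given a document whose `paths` contains `/api/values/{id}` with `operationId: Values_Get`, calling `resolve_operation(doc, "Values_Get", id=5)` yields a `GET` against `.../api/values/5`, without the caller ever hard-coding the route.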

To show exactly what a Microservice is, he demoed a sample service starting from just the raw endpoint. You can even look for yourselves here:

- Sample Microservice endpoint

- Sample Metadata endpoint:

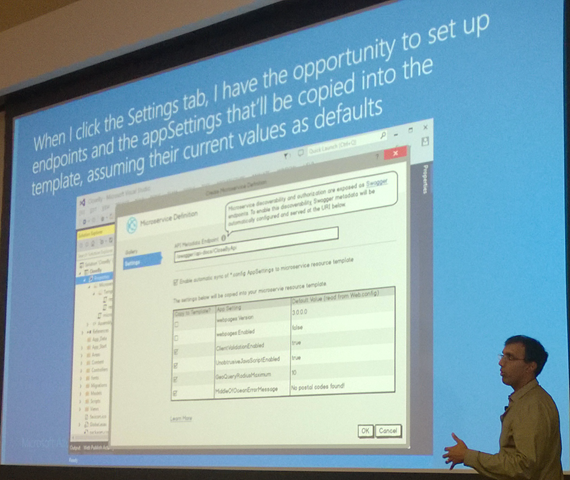

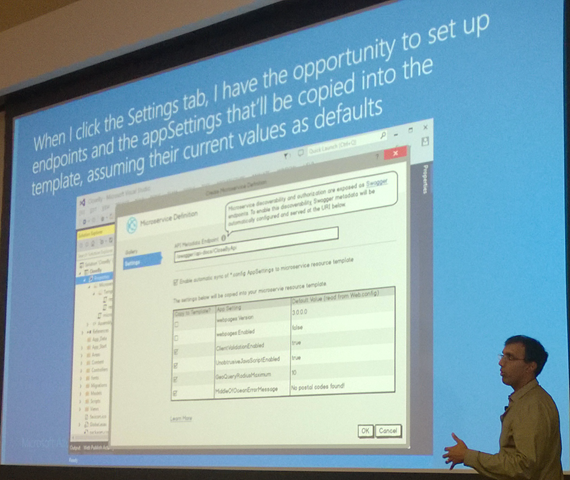
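The demo ran on Azure Websites, but since a Microservice here is just a RESTful service plus optional metadata, its shape can be sketched in any stack. Here’s a minimal Python WSGI app (standard library only) that serves one resource and a Swagger 2.0 description of it. The `/api/values` and `/swagger` paths and the payloads are hypothetical stand-ins, not the addresses of the sample endpoints from the session.

```python
import json
from wsgiref.simple_server import make_server

VALUES = ["value1", "value2"]  # hypothetical resource data

# Minimal Swagger 2.0 metadata describing the one operation this service exposes.
SWAGGER = {
    "swagger": "2.0",
    "info": {"title": "Sample Microservice", "version": "1.0"},
    "basePath": "/",
    "paths": {
        "/api/values": {
            "get": {
                "operationId": "Values_Get",
                "produces": ["application/json"],
                "responses": {"200": {"description": "List of values"}},
            }
        }
    },
}


def app(environ, start_response):
    """Serve GET /api/values (data) and GET /swagger (metadata); anything else is a 404."""
    routes = {"/api/values": VALUES, "/swagger": SWAGGER}
    path = environ.get("PATH_INFO", "")
    if environ.get("REQUEST_METHOD") != "GET" or path not in routes:
        start_response("404 Not Found", [("Content-Type", "application/json")])
        return [b'{"error": "not found"}']
    body = json.dumps(routes[path]).encode("utf-8")
    start_response("200 OK", [("Content-Type", "application/json"),
                              ("Content-Length", str(len(body)))])
    return [body]


if __name__ == "__main__":
    with make_server("localhost", 8080, app) as server:
        server.serve_forever()
```

Keeping the metadata beside the service is what makes the “IDispatch”-style late binding and gallery publishing possible, though, as noted above, such metadata is technically optional.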

What’s really cool about all of this is that the tooling support for building such services is going to be baked into Visual Studio. We already have Web API for cleanly building out RESTful services, but the ability to package these with metadata and publish them to the gallery (à la NuGet) is going to be included as part of a project template and the Publish Web experience. This was all shown in storyboard form, and that’s when I had my moment of developer happiness (much like Nino’s yesterday, when the productivity tool he developed finally gave him a reprieve from crying over BizTalk development pain points).

Finally, we’re getting low enough into the platform that we’re inside Visual Studio and can meaningfully deploy some code – one of the greatest feelings in the whole world.

The talk continued with fragments of code (that, unfortunately, were too blurry in my photos to capture here) demonstrating the direct runtime API that Microservices will have access to for things like encrypted isolated storage, and a mechanism to manage and flow tokens for external SaaS products that are used within a larger workflow. There’s some really exciting stuff here. I honestly could have sat through an entire day of that session just digging all the way into it.

But, alas, there were still more sessions to be had.

API Management and Mobile Services

I’m grouping these together inasmuch as they represent functionality within Azure that we have had for some time now (Mobile Services certainly longer than API Management). I’ve seen quite a bit on these already, and was mainly looking for the touchpoints with the Microservices story.

API Management sits right under Microservices in the diagram shown earlier, and it would make sense for it to become the monetization strategy for developers who want to write and expose a specific capability within Azure. However, that wasn’t explicitly stated; in fact, the only direct statement was the one noted above, that the capabilities of API Management are available within the gateway. That left me a little confused, and I honestly could have missed something obvious there. As much as Josh was fighting PowerPoint, I was fighting my Surface towards the beginning of his talk.

If you’re not familiar with API Management, it lets you put a cloud-hosted wrapper around your API and project it (in the data-shaping sense), carefully choosing which resources and actions are exposed and the routes through which they can be accessed. It also handles packaging your APIs into saleable subscriptions and monitoring their use. That’s a gross oversimplification, and I highly recommend that you dig in right away and explore it, because there’s a lot there, and it’s super cool.
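As a rough mental model of that projection (not API Management’s actual implementation or configuration format), here’s a Python sketch of a facade that exposes only a whitelisted subset of a backend API’s operations, requires a subscription key, and counts usage per subscriber. The `ApiFacade` class, route names, and key values are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ApiFacade:
    """Hypothetical facade exposing a whitelisted projection of a backend API."""
    routes: dict            # public (METHOD, route) -> backend route; unlisted ones stay hidden
    subscriptions: dict     # subscription key -> product name
    usage: Counter = field(default_factory=Counter)

    def project(self, method: str, route: str, subscription_key: str) -> str:
        """Validate the caller, map the public route to the backend, and meter the call."""
        if subscription_key not in self.subscriptions:
            raise PermissionError("401: missing or invalid subscription key")
        backend = self.routes.get((method.upper(), route))
        if backend is None:
            raise LookupError("404: operation not exposed")
        self.usage[(subscription_key, method.upper(), route)] += 1
        return backend
```

The design point is that the public surface (`/orders`, say) can be far narrower and cleaner than the backend it fronts, while subscription and usage data come for free at the facade.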

That being said, in terms of Microservices, it would be truly great if we could use it to wrap external services and turn the Azure-hosted portion of the API into a Microservice. Ideally, we could even flow back to our external service some of the same information that the runtime APIs would provide if we were writing code within a proper Azure App Container. For example, we could request a certain value from the secure store and have it passed in a special HTTP header to our external service, which could then use that value in any way that it wanted. That would really help speed adoption, as I could then quite easily take any on-premise BizTalk Server capability, wrap a nice RESTful endpoint around it, and not have to worry about authorization, rate limiting, or re-implementation.
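Here’s a minimal Python sketch of that wished-for behavior, purely hypothetical since no such feature was announced: a gateway-side function that enforces a token-bucket rate limit, pulls a value from a stand-in secure store, and attaches it as a custom header on the outbound request to the external service. `SECURE_STORE`, the `X-Secure-Value` header name, and the target URL are all assumptions; the request is only built, not sent.

```python
import time
import urllib.request

# Hypothetical stand-in for the platform's secure token store.
SECURE_STORE = {"crm-api-key": "s3cr3t"}


class TokenBucket:
    """Token-bucket rate limiter: refills `rate` tokens/second, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def forward(url: str, secret_name: str, bucket: TokenBucket) -> urllib.request.Request:
    """Build (but don't send) the outbound request to the external service."""
    if not bucket.allow():
        raise RuntimeError("429: rate limit exceeded")
    req = urllib.request.Request(url, method="POST")
    # Hypothetical header; the external service decides what to do with the value.
    req.add_header("X-Secure-Value", SECURE_STORE[secret_name])
    return req
```

The external BizTalk-backed service then only has to read one header, while key custody and throttling stay in the gateway.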

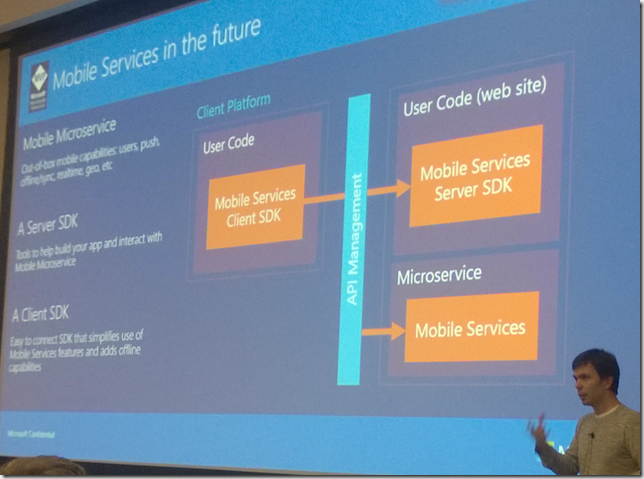

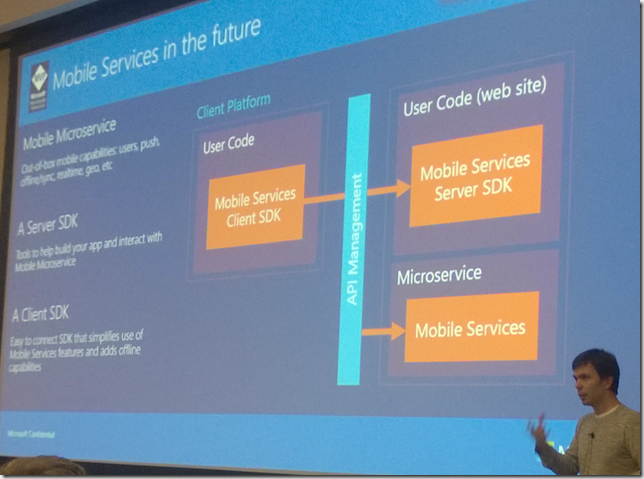

Next up was Kirill Gavrylyuk, rocking Xamarin Studio on a Mac to talk about Mobile Services (he even went for the hat trick and launched an Android emulator). Towards the end of his talk, he did feature a slide showing the enterprise (non-consumer-centric) Mobile Services development experience by positioning Mobile Services within the scope of the refactored Azure App Platform:

I’m going to let that one speak for itself for now.

Those two talks were a lot of fun, and I don’t want to sell them short by not writing as much, but there’s already a lot of information out there on these ones.

Big Data With Azure Data Factory & Power BI

The day took a bit of a shift after lunch, as we saw a few talks on both Azure Data Factory and Power BI. From the demos and talks, it’s clear that there’s some really exciting stuff there. Sadly, I’m already out-of-date in that area, as quite a few things were mentioned that I was entirely unaware of (e.g., Azure Data Factory itself). For now, I’ll leave coverage of those topics to the BI and Big Data experts, which I will be the first to admit I am not. I don’t think in more than 4 dimensions at a time, though with Power BI maybe all I need to do is speak English.

For all of those out there that spend their days writing MDX queries, I salute you. You deserve a raise, no matter what you’re being paid.

HCA Rocks BizTalk Server 2013 R2

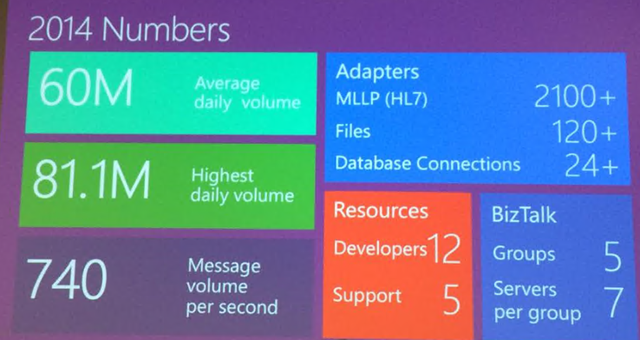

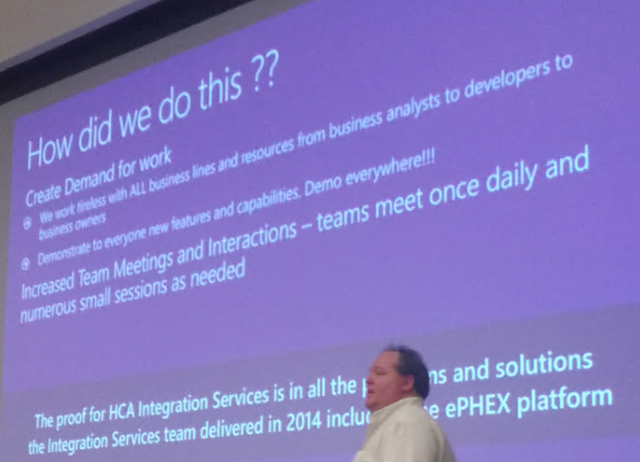

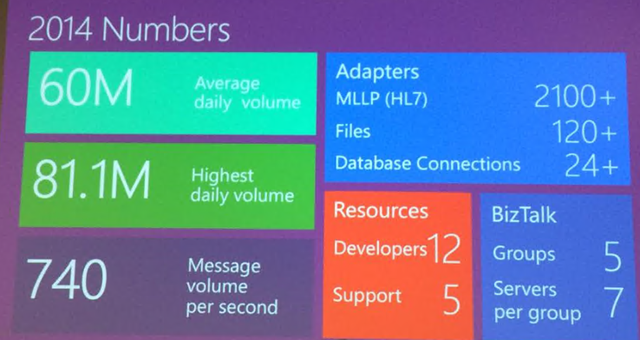

For the last talk of the day, Alan Scott from HCA and Todd Rivers from Microsoft presented on HCA’s use of BizTalk Server 2010 & 2013 R2 for processing HL7 (and MSMQ + XML) workloads. The presentation was excellent, and it’s going to be really difficult to capture it here. One of the most impressive things (besides their own web-based rules editing experience) is the sheer scale of the installation:

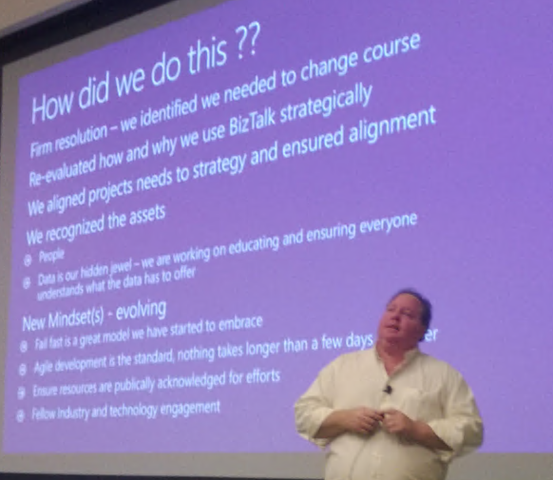

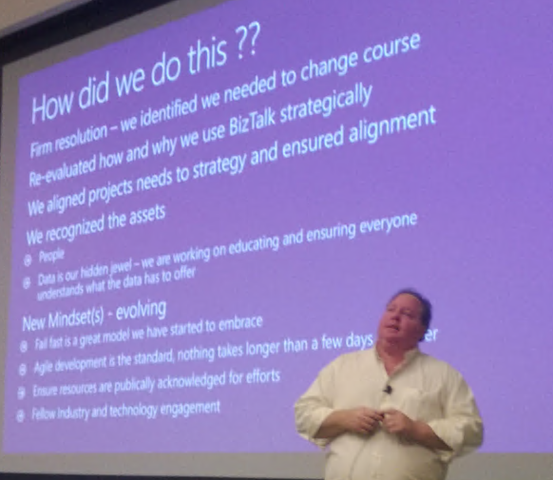

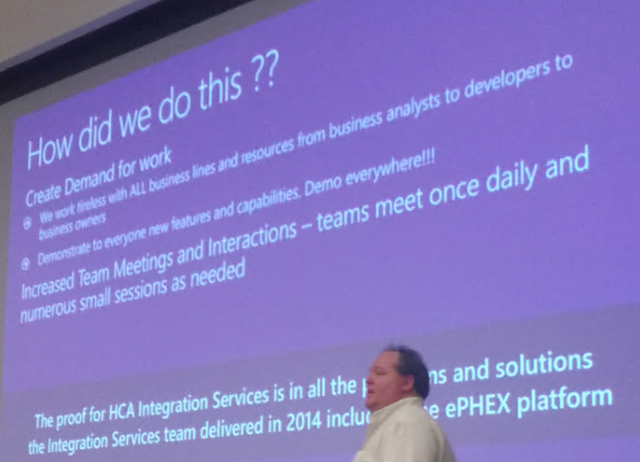

Cultural Change Reaps Biggest Rewards – Value People Not Software

The presentation really highlighted not only the flexibility of the BizTalk platform, but also the power of having a leader who is able to evangelize the capability to the business (while being careful to talk not in terms of the platform, but in terms of the people and the data) and who equips the developers with the tools they need to succeed with that platform.

Looking Forward

Looking forward beyond today, I’m getting really excited to see the direction that we’re headed. We still have a rock solid platform on-premise alongside a hyper-flexible distributed platform brewing in the cloud.

To that end, I want to announce today that QuickLearn Training will be hosting an Azure BizTalk Microservices Hackathon shortly after the release of the public preview. It will be a fun time to get together, look through it all, discuss which microservices will be valuable, and, most of all, build some that can provide value to the entire community.

If any community is up for that, I know it’s the BizTalk community. I’m just really excited that there’s going to be a proper mechanism to surface those efforts so that anyone who builds for the platform will have it at their disposal without worries.

If you want more details, or you want to join us (physically, or even remotely) when that happens, head over here: http://bit.ly/1AcMWIy

For that matter, if you want to host one in your city at the same time and connect up with us here in Kirkland, WA via live remote feed, that would be great too 😉 Let’s build the future together.

Well, that’s all for now! Take care!


I rolled all of the code into a library that I’ve named the T-Rex Metadata Library1. The library is available as a NuGet package as well that you can add directly to your API App projects within Visual Studio 2013.