This is the fourth in a series of posts exploring What’s New in BizTalk Server 2013 R2. It is also the second in a series of three posts covering the enhancements to BizTalk Server’s support for RESTful services in the 2013 R2 release.

In my last post, I wrote about the support for JSON Schemas in BizTalk Server 2013 R2. I started out with a small project that included a schema generated from the Yahoo Finance API and a unit test to verify the schema model. I was going to put together a follow-up article last week, but spent the week traveling, in the hospital, and then recovering from being sick.

However, I am back and ready to tear apart the next installment, which already hit the GitHub repo a few days back.

Pipeline Support for JSON Documents

In BizTalk Server 2013 R2, Microsoft approached JSON content in much the same way that we did with custom components in the previous version: the JSON-to-XML conversion is performed in the Decode stage of the pipeline. Because the decoder only converts the message, the Disassemble stage still needs an XML Disassembler component to handle property promotion.

The official component, Microsoft.BizTalk.Component.JsonDecoder, exposes two properties, Root Node and Root Node Namespace, which determine the name and namespace of the root element of the XML message it creates.

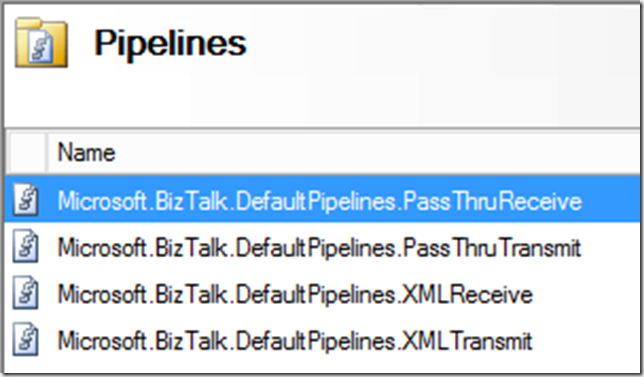
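To make the role of those two properties concrete, here is a simplified sketch of wrapping converted JSON in a root element with a given name and namespace. It is an illustration only, not the JsonDecoder's actual implementation: the `JsonToXmlSketch` class is hypothetical, it uses System.Text.Json from modern .NET (not what BizTalk Server 2013 R2 runs on), and it assumes the JSON document is a single object whose property names are valid XML names. Child elements are left unqualified, which is consistent with the test later in this post locating the Bid element without a namespace.

```csharp
using System;
using System.Text.Json;
using System.Xml.Linq;

public static class JsonToXmlSketch
{
    // Wraps the converted JSON in a root element named rootNode in the
    // rootNamespace namespace; child elements are left unqualified.
    public static XDocument Convert(string json, string rootNode, string rootNamespace)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        if (doc.RootElement.ValueKind != JsonValueKind.Object)
        {
            throw new ArgumentException("This sketch only handles a top-level JSON object.", nameof(json));
        }

        XNamespace ns = rootNamespace;
        var root = new XElement(ns + rootNode);
        AddContent(root, doc.RootElement);
        return new XDocument(root);
    }

    private static void AddContent(XElement parent, JsonElement value)
    {
        switch (value.ValueKind)
        {
            case JsonValueKind.Object:
                foreach (JsonProperty property in value.EnumerateObject())
                {
                    if (property.Value.ValueKind == JsonValueKind.Array)
                    {
                        // Each array item becomes a sibling element named after the property.
                        foreach (JsonElement item in property.Value.EnumerateArray())
                        {
                            parent.Add(CreateElement(property.Name, item));
                        }
                    }
                    else
                    {
                        parent.Add(CreateElement(property.Name, property.Value));
                    }
                }
                break;
            case JsonValueKind.Null:
                // A null value produces an empty element.
                break;
            case JsonValueKind.String:
                parent.Value = value.GetString() ?? string.Empty;
                break;
            default:
                // Numbers and booleans (and, in this simplified sketch, arrays nested
                // directly inside arrays) are written as their raw JSON text.
                parent.Value = value.GetRawText();
                break;
        }
    }

    private static XElement CreateElement(string name, JsonElement value)
    {
        var element = new XElement(name);
        AddContent(element, value);
        return element;
    }
}
```

Calling `JsonToXmlSketch.Convert(json, "ServiceResponse", "http://schemas.finance.yahoo.com/API/2014/08/")` on a JSON object yields a document whose root element is `ServiceResponse` in that namespace, with the JSON properties beneath it. Mapping arrays to repeated sibling elements is one common choice; the real component may treat arrays, nulls, and names that are not valid XML differently.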

Note that BizTalk Server 2013 R2 does not include a JSONReceive pipeline; only the pipeline component was included. In other words, to work with JSON you will need a custom pipeline.

Creating a Pipeline for Receiving JSON Messages

Ultimately, I would like to create a reusable pipeline, so that I don’t have to create a new pipeline for each and every message that will be received. Since a BizTalk message type is determined by the target namespace and root node name, it isn’t reasonable to set the Root Node and Root Node Namespace properties to the same values for every message when those messages have different bodies and content. As a result, it might be best to leave the values blank at design time and set them per instance instead (for example, on each receive location that uses the pipeline).

This is also an interesting constraint. Every message that passes through a given receive location is wrapped in the same root node and namespace, and therefore has the same message type. If that location receives more than one kind of service response, we might end up needing a fairly flexible schema (i.e., with a lot more choice groups) to cover the variety of inputs and responses. That is not explored within this blog post, but would be an excellent discussion to bring up during one of QuickLearn’s BizTalk Server Developer Deep Dive classes.
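The constraint follows from how BizTalk identifies messages: the message type is the root element's namespace and local name joined with `#`. The hypothetical helper below computes that string from an XML document using System.Xml.Linq. It is a sketch that only covers documents whose root element is namespace-qualified, which is the case for everything the JsonDecoder produces when Root Node Namespace is set.

```csharp
using System;
using System.Xml.Linq;

public static class MessageTypeSketch
{
    // Hypothetical helper: builds "<namespace>#<root name>" from the root element.
    // Documents whose root element has no namespace are rejected rather than guessed at.
    public static string GetMessageType(XDocument document)
    {
        XElement root = document.Root ?? throw new ArgumentException("Document has no root element.", nameof(document));
        if (string.IsNullOrEmpty(root.Name.NamespaceName))
        {
            throw new ArgumentException("This sketch expects a namespace-qualified root element.", nameof(document));
        }

        return root.Name.NamespaceName + "#" + root.Name.LocalName;
    }
}
```

With the values used in the test in this post, a quote and a (hypothetical) error response that are both wrapped in `ServiceResponse` would resolve to the same message type, `http://schemas.finance.yahoo.com/API/2014/08/#ServiceResponse`, which is why a single schema would have to accommodate both shapes.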

To make the pipeline behave consistently with typical BizTalk Server message processing, I started from what the XMLReceive pipeline contains and simply added a JsonDecoder to the Decode stage, with none of its properties set at design time.

Testing the JSONReceive Pipeline

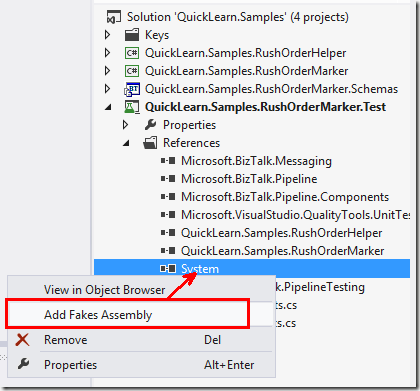

In the same vein as my last post, I will be creating automated tests for the pipeline to verify its functionality. However, the built-in support for testing pipelines cannot be used here: the decoder's properties were left blank, and the TestablePipelineBase class does not support per-instance configuration. Luckily, the Winterdom PipelineTesting library does support per-instance configuration, and it has a nifty NuGet package as of June.

Unfortunately, per-instance configuration is not very pretty. It requires an XML configuration file that resembles the per-instance pipeline configuration section of a bindings file, so it is not as simple as setting properties on the component instance in code. To work around that to some degree, and to be able to reuse the configuration file with different property values, I put together a template with tokens in place of the actual property values.

NOTE: If you’re adapting this approach for other pipeline components, the vt attribute on each property element (which declares the value's variant type) is essential for the properties to be read correctly. See KB884981 for details.
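As a sketch of the token-template idea, the hypothetical class below fills tokens in a per-instance configuration template and writes the result to a separate file. Several details are assumptions rather than the contents of the sample repo: the `{RootNode}` and `{RootNodeNamespace}` token names, the element layout (modeled on the pipeline data section of a bindings file), and the Decode stage `CategoryId`, which should be checked against a bindings file exported from your own environment. The `vt="8"` attribute marks each value as a string (VT_BSTR), and the values are XML-escaped with `SecurityElement.Escape` before substitution.

```csharp
using System.IO;
using System.Security;

public static class PipelineConfigTemplate
{
    // Assumed layout and hypothetical token names; verify the CategoryId against
    // an exported bindings file. vt="8" declares each property value as a string.
    public const string Template =
@"<Root xmlns:xsd=""http://www.w3.org/2001/XMLSchema"" xmlns:xsi=""http://www.w3.org/2001/XMLSchema-instance"">
  <Stages>
    <Stage CategoryId=""9d0e4103-4cce-4536-83fa-4a5040674ad6"">
      <Components>
        <Component Name=""Microsoft.BizTalk.Component.JsonDecoder"">
          <Properties>
            <RootNode vt=""8"">{RootNode}</RootNode>
            <RootNodeNamespace vt=""8"">{RootNodeNamespace}</RootNodeNamespace>
          </Properties>
        </Component>
      </Components>
    </Stage>
  </Stages>
</Root>";

    // Replaces the tokens with XML-escaped values and writes the result to
    // outputPath, leaving the template itself untouched for the next test.
    public static string WriteConfig(string outputPath, string rootNode, string namespaceUri)
    {
        string config = Template
            .Replace("{RootNode}", SecurityElement.Escape(rootNode))
            .Replace("{RootNodeNamespace}", SecurityElement.Escape(namespaceUri));
        File.WriteAllText(outputPath, config);
        return outputPath;
    }
}
```

A test could then pass the returned path to ApplyInstanceConfig on the ReceivePipelineWrapper, just as the configureJSONReceivePipeline method in this post does with the edited pipelineconfig.xml. Writing to a separate file rather than saving over the deployed template keeps the tokens available for the next test that needs different values.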

From there, the per-instance configuration is a matter of XML manipulation and use of the ReceivePipelineWrapper class’ ApplyInstanceConfig method:

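[sourcecode language="csharp"]
using System.IO;
using System.Linq;
using System.Xml.Linq;
using Winterdom.BizTalk.PipelineTesting;

// Fills in the JsonDecoder's per-instance properties and applies them to the pipeline.
// TestContext is the MSTest context property of the enclosing test class.
private void configureJSONReceivePipeline(ReceivePipelineWrapper pipeline, string rootNode, string namespaceUri)
{
    string configPath = Path.Combine(TestContext.DeploymentDirectory, "pipelineconfig.xml");
    var configDoc = XDocument.Load(configPath);

    // Set the Root Node and Root Node Namespace values in the configuration document.
    configDoc.Descendants("RootNode").First().SetValue(rootNode);
    configDoc.Descendants("RootNodeNamespace").First().SetValue(namespaceUri);

    // This saves over the deployed copy; because the elements are located by name,
    // repeated calls with different values still work.
    configDoc.Save(configPath);

    pipeline.ApplyInstanceConfig(configPath);
}
[/sourcecode]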

The final test code includes a validation of the output against the schema from last week’s post. As a result, we’re really dealing with an integration test here rather than a unit test, but it’s a test nonetheless.

[sourcecode language="csharp"]
using System.IO;
using System.Linq;
using System.Xml.Linq;
using Microsoft.VisualStudio.TestTools.UnitTesting;
using Winterdom.BizTalk.PipelineTesting;
// JSONReceive and ServiceResponse come from the sample's pipeline and schema projects.

[TestMethod]
[DeploymentItem(@"Messages\sample.json")]
[DeploymentItem(@"Configuration\pipelineconfig.xml")]
public void JSONReceive_JSONMessage_CorrectValidXMLReturned()
{
    string rootNode = "ServiceResponse";
    string namespaceUri = "http://schemas.finance.yahoo.com/API/2014/08/";
    string sourceDoc = Path.Combine(TestContext.DeploymentDirectory, "sample.json");
    string outputDoc = Path.Combine(TestContext.DeploymentDirectory, "JSONReceive.out");

    // Create the pipeline under test and apply the per-instance JsonDecoder settings.
    var pipeline = PipelineFactory.CreateReceivePipeline(typeof(JSONReceive));
    configureJSONReceivePipeline(pipeline, rootNode, namespaceUri);

    using (var inputStream = File.OpenRead(sourceDoc))
    {
        // Make the schema known to the pipeline so the XML Disassembler can resolve it.
        pipeline.AddDocSpec(typeof(ServiceResponse));
        var result = pipeline.Execute(MessageHelper.CreateFromStream(inputStream));
        Assert.IsTrue(result.Count > 0, "No messages returned from pipeline.");

        // File.Create truncates any output left over from a previous run.
        using (var outputFile = File.Create(outputDoc))
        {
            result[0].BodyPart.GetOriginalDataStream().CopyTo(outputFile);
        }
    }

    // Validate the output against the compiled schema from the previous post.
    ServiceResponse schema = new ServiceResponse();
    Assert.IsTrue(schema.ValidateInstance(outputDoc, Microsoft.BizTalk.TestTools.Schema.OutputInstanceType.XML),
        "Output message failed validation against the schema");

    // Assert.AreEqual takes the expected value first, then the actual value.
    Assert.AreEqual("44.97", XDocument.Load(outputDoc).Descendants("Bid").First().Value, "Incorrect Bid amount in output file");
}
[/sourcecode]

After giving it a run, it looks like we have a winner.
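The test relies on the BizTalk test tools' ValidateInstance method. If you want a similar check that only needs the .xsd file and the framework's XML libraries, plain System.Xml.Schema validation is one alternative. The sketch below is a swapped-in technique, not what the sample repo does; the `OutputValidator` name is hypothetical, and the schema is passed as a string for simplicity.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Xml;
using System.Xml.Linq;
using System.Xml.Schema;

public static class OutputValidator
{
    // Validates a document against a single XSD and collects every reported
    // message instead of stopping at the first problem.
    public static IList<string> Validate(XDocument document, string targetNamespace, string xsd)
    {
        var schemas = new XmlSchemaSet();
        using (var reader = XmlReader.Create(new StringReader(xsd)))
        {
            schemas.Add(targetNamespace, reader);
        }

        var errors = new List<string>();
        // The Validate extension method (System.Xml.Schema.Extensions) sends errors
        // and warnings to the handler rather than throwing.
        document.Validate(schemas, (sender, e) => errors.Add(e.Message));
        return errors;
    }
}
```

An empty list means the document conformed to the schema; anything else can be joined into the assertion message, which makes a failing test easier to diagnose than a bare true/false result.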

Coming Up in the Next Installment

In the next installment of this series, I will put what we have here to use and build out a more complete integration that sends JSON messages as well, using the new JsonEncoder component.

Take care until then!

If you would like to access sample code for this blog post, you can find it on GitHub.